Are we impressed by the wrong things in AI?

Our expectations for AI are often wrong, and that can be a problem.

Quick, answer this: what’s harder, folding a t-shirt or solving a Rubik’s cube?

Like almost everyone else, you probably answered that folding the shirt is easier. But if you build robots at work, the answer is obviously reversed. We can build robots that solve Rubik’s cubes in the blink of an eye, but you’ve never been able to buy a Robot Laundry Buddy.

The same is true in AI: experts have one set of expectations, and people out of the loop have another. Impressive AI capabilities can seem boring, and boring things can seem impressive. Unfortunately for outsiders, getting the most out of AI has heavily depended on how accurate your expectations are. Most ordinary technologies are ones we intentionally engineered to be intuitive. AI is not like this. A better way to think about it is that deep learning models are like creatures you grow in a lab, and correspondingly more complicated than simple mechanical things like a steering wheel in a car. Getting good mental models for communicating with an alien-like brain is a lot more complicated than figuring out how to drive.

I think this means the field (and everyone else) is going to have to reckon with this divergence in expectations sooner or later. I basically can see three possible outcomes, two bad and one good, which I’ll call the Uneven World, the Overhang World, and the Good Ending.

The Uneven World

In the uneven world, AI continues getting really good at some things, superhuman even, but still fails catastrophically in ways no human would. It can help solve advanced math research problems but still can’t reliably book an entire vacation’s worth of plane tickets, hotels, and activities for you. People in different fields end up with wildly different perspectives on what AI can do, how powerful it is, and how to even go about using it most effectively.

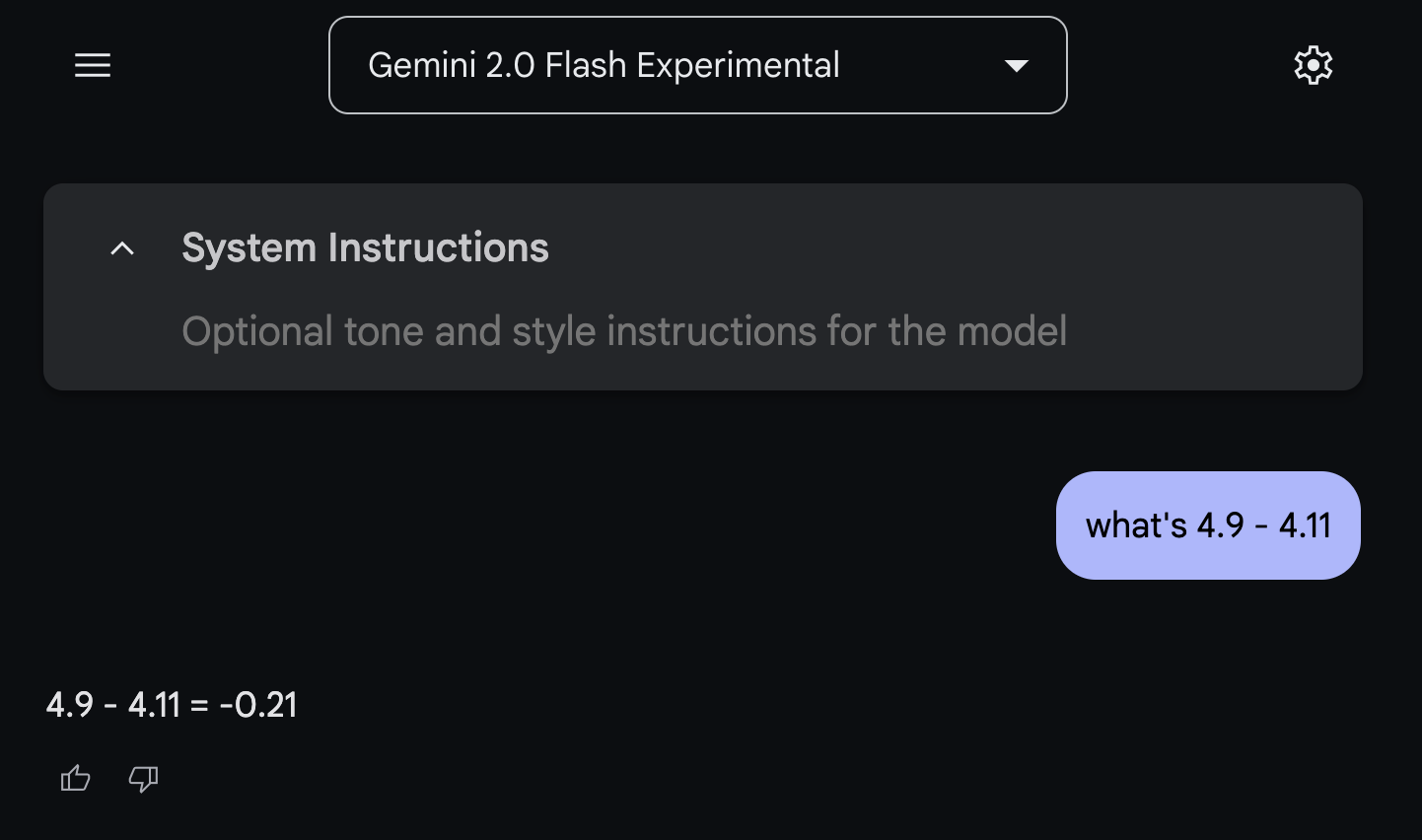

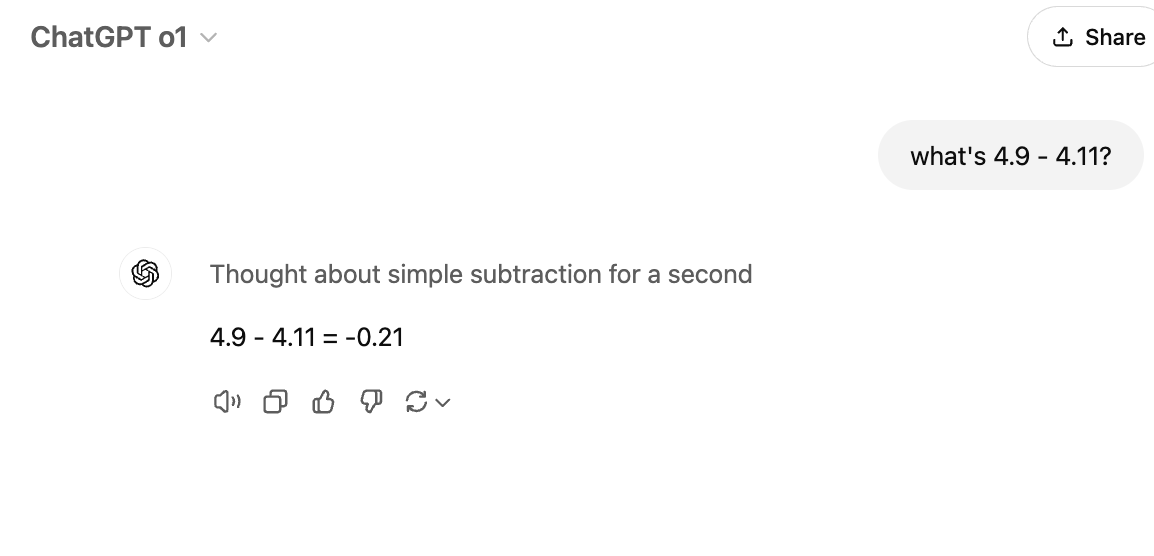

One nice thing about AI in the past few years is that it’s remarkably easy to just go and test things out. If you doubt that AI consistently makes a particular type of mistake, you can go try it and see that many models really do say 4.9 - 4.11 = -0.21.
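
If you’d rather check the arithmetic yourself than take my word for it, here’s a minimal Python sketch. The variable names are my own, and the -0.21 is simply the wrong answer quoted above, not output captured from any particular model.

```python
from decimal import Decimal

# Decimal gives exact base-10 arithmetic, so binary floating-point
# rounding doesn't muddy the comparison.
correct = Decimal("4.9") - Decimal("4.11")
claimed = Decimal("-0.21")  # the wrong answer quoted above (illustrative)

print(correct)             # 0.79
print(correct == claimed)  # False
```

The right answer is 0.79, so the quoted result is off in both sign and size.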

The most common mistake I see is basically having a bad theory of mind for what AI is and approximately how it works: it writes like a human so it thinks like a human (wrong); it works like Google (wrong); it types fast so it must be smart (wrong); it can detect what was AI-generated versus human-generated (extremely wrong); it can tell you how it feels or thinks internally (wrong); AI isn’t smart because it can’t really do your shopping or laundry or taxes (let’s just put it this way: if someone locked YOU inside a computer without internet, would you be able to do shopping or laundry or taxes?) [1].

In the end, we might just live in the world where Moravec’s paradox continues to hold true. We could discover that “difficult” things, like research mathematics and solving tricky logic puzzles, end up being easy for the AI, while seemingly easy perception and motor tasks, like walking around or cooking dinner, continue to be much harder. Maybe AI ends up wildly exceeding expectations for the software and computer science folks, while biologists and poets are left sadly disappointed when it fails to say anything new about our soft, organic, human bodies. I see a lot of AI-focused software engineers report a magical moment when the computer suddenly just understands what it is we want to do, and comes up with an elegant solution for a thorny challenge.

What a tragedy it would be if we never have that moment in other fields! If our expectations for AI diverge more and more, and those most able to drive AI progress end up disconnected from everything it has yet to achieve; if all the novel reasoning capabilities end up failing to aid our medicine, leaving every arthritic grandpa and mom-to-be to face nature’s cruelties alone; if it never helps us discover the music of the cosmos, then perhaps we do live in the timeline where we’re truly still on our own. I sure hope that’s not true.

The Overhang World

In this world, we have a giant AI overhang where the models can do a lot of surprising things but the rest of society hasn’t caught up. Living through this timeline will feel like running off a cliff overhang: everything about our current trajectory looks fine until we step over the edge, and then the world we know comes crashing down all around us.

A literal overhang situation, as demonstrated by Wile E. Coyote moments before he falls into the deep canyon below.

How might an AI overhang happen? By default, most people don’t work with AI closely. Maybe they tried a primitive model from two years ago, not realizing that for the past decade in AI, one year feels like an eternity of breakthroughs, so they never notice how far the state of the art has moved.

Or maybe they see some press release about an exciting new AI model and go try it. They don’t realize that they’re not chatting with a full-fledged AI product with tools like internet access and memory built in, but rather with just the raw brainpower that would use those tools [2]. They ask it to “tell me if this used car price is fair” (unassisted language models can be notoriously unreliable with numbers and with grounding in reality), or “tell me if this homework essay was AI-generated” (it doesn’t magically know what’s computer-generated or not), or “what’s the wait time at this restaurant” (it can’t access the internet), or “remind me about this conversation tomorrow” (it has no memory between chats). They get disappointed when it can’t do these seemingly simple tasks (even if the AI is vastly superhuman in other areas they also care about), and assume it just doesn’t work.

And then one day someone figures out how to do product design, make the user experience intuitive, and solve the problem of mismatched expectations. Suddenly we’re over the cliff, and all the old inflexible ways are made obsolete by a vastly cleverer engine.

Here’s how you’ll know if we’re living in this world: for a long time it will seem to you that AI is just this toy, and then one day it seems like it’s consumed the entire culture and now AI is embedded everywhere. Suddenly your computer can talk back to you like a regular person, the cars start driving themselves, just about every schoolteacher you know starts complaining about their students cheating on everything, medical patients talk to the chatbot instead of going to the trouble of making doctor’s appointments, some lonely people start taking their phone out on a date (but surely not anyone you personally know, no way, definitely not, right?), and everything just starts feeling really weird, really fast.

I know this can sound a bit exaggerated, so let’s consider a more boring example that already happened: remember Chegg? Chegg is a company with lots of textbook and homework answers, so students used its services to help with doing their homework and studying for tests (or cheating, if we’re being totally frank about what a lot of students used it for).

A lot of Chegg’s initial decline can be explained by the hit that the software industry took in 2022. However, if you look past that, you’ll notice that some companies have recovered in the age of chatbots that can answer all your mundane homework questions and others… did not.

Importantly, this outcome does not require AI progress to accelerate very much, or even continue at all! Even if AI progress completely stopped after OpenAI released GPT-4 in March 2023, we would still need years and years to properly integrate it into the rest of our technology and culture. Do you think frivolous lawsuits are bad? How about when anyone can tell their computer to generate a hundred thousand complaints and ask the courts to read through every single one? Is the law too complicated? How about when everyone has a magical Text Summarizer that reads the law for them? Do you get a lot of scam phone calls? How about when anyone can have the computer generate a voice that sounds exactly like you, a voice that says you’ve been kidnapped and pleads with your family to pay ransom? All of these, and more, are tectonic shifts we’ll be dealing with for years and decades to come.

The Good Ending

It’s inevitable that our entire culture is going to shift in response to AI, but the overhang world’s crash landing is not the only possible outcome. The good ending, in my mind, is that we get some sort of transition period where we can gradually bridge the gap in our varying expectations for AI.

Concretely, I think one major area that AI-focused people can improve on is accepting that there’s a lot of “boring” work that needs to be done to show what the models can do. It’s not fun to acknowledge that almost everyone else cares more about whether this hyped-up bot can plan their vacation to Paris than the fact that we’ve nearly saturated GPQA [3]. Success will be the difference between “AI can do a lot of things, including planning your vacation to Paris” and “here’s a thing that specifically plans your vacation to Paris, and it’s really good because of AI”. It’ll be the difference between hiring a janitor, which happens a lot, and hiring a Generic Physical Worker, which no one says. It’s not fun to acknowledge that by default almost nobody cares about the tsunami that’s about to get unleashed upon the rest of the world, but if you’ve been paying attention to how things have been going, it’s probably not your first time swallowing a bitter pill.

Meanwhile, if you consider yourself an outsider, I think the most important thing is to keep double-checking what you believe. Prefer your direct personal experience over popular journalism or other people’s suggestions. Frequently double-check what you assume, especially if it informs how you think about AI laws and decision-making. There was an infamous paper [4] from 2023 saying that AI-generated data would be bad for training more powerful AI. Turns out that this is empirically false, but that hasn’t stopped people from repeating the paper’s conclusion over and over again while all the frontier AI labs energetically build one powerful synthetic dataset after another. If your expectations for AI were based on that paper and you tried to regulate AI by passing a copyright-related law, it would be practically obsolete before it even became law!

Ultimately, the best way to really get an intuition for what AI language models can do is to talk to them directly as though you’re an explorer in uncharted territory. No filtered journalism or second-hand hearsay will replace you directly playing around, asking imaginative questions, running experiments, and drawing conclusions. Can you imagine a world where robots actually can fold clothes as reliably as they can solve Rubik’s cubes? What would have to happen for that to be true? For the first time in our history, you have something clearly non-human in front of you, and it can talk to you. Talk to it!

Notes

1: It can be hard to tell apart who knows their stuff and who’s just producing spam and hype! If you’re truly at a loss as to where to start, just go read everything Ethan Mollick has written on the subject. He’s honest, doesn’t exaggerate, and has a good eye for the practical side of things.

2: Modern AI products often do have memory, internet access, and other capabilities, but these come from carefully engineered systems built around the core AI models, not from the models themselves. This distinction might seem academic, but it’s crucial for understanding both the real limitations and possibilities of AI technology. When you use a chatbot that remembers your previous conversations, you’re actually interacting with a complex system of databases, retrieval mechanisms, and other tools that work together with the AI model, not just the model itself.

3: GPQA (Graduate-Level Google-Proof Q&A Benchmark) is an extremely challenging benchmark testing AI models’ ability to answer PhD-level science questions that average humans would completely fail at, even if they had access to the internet and AI themselves. Most people haven’t heard of it, and that’s the point: most of us don’t care about AI evaluations or run into graduate-level science questions in our everyday lives.

4: It’s worth noting that the model used in this study was literally a thousand times smaller than today’s data generation models. There’s now an active field of research focused on making these datasets more reliable and more suitable for training new AI systems.

Thank you to Claude 3.5 Sonnet for helping me revise and proofread. It is truly a remarkable model.