Prompt engineering for humans

On June 11, 2020, OpenAI released the first version of GPT-3, a large language model (LLM) capable of performing many tasks without being explicitly programmed to do so. It made waves far beyond the artificial intelligence research community.

Since then, we’ve discovered many techniques for working with LLMs, among them “prompt engineering”: carefully crafting the text input we give to the model. For example, a prompt for generating a short story might go: “The following is a children’s story about a dog who can talk to cats.” It turns out that certain ways of writing prompts get better results. Specifying that the story is for children, for instance, means the output will probably have simpler language and an easy-to-follow plot. It’s not that changing your prompt makes the artificial intelligence any smarter (it was already capable of generating that text, and much more!). The key is in how the prompt is written, which guides the AI just enough for it to produce the desired output.

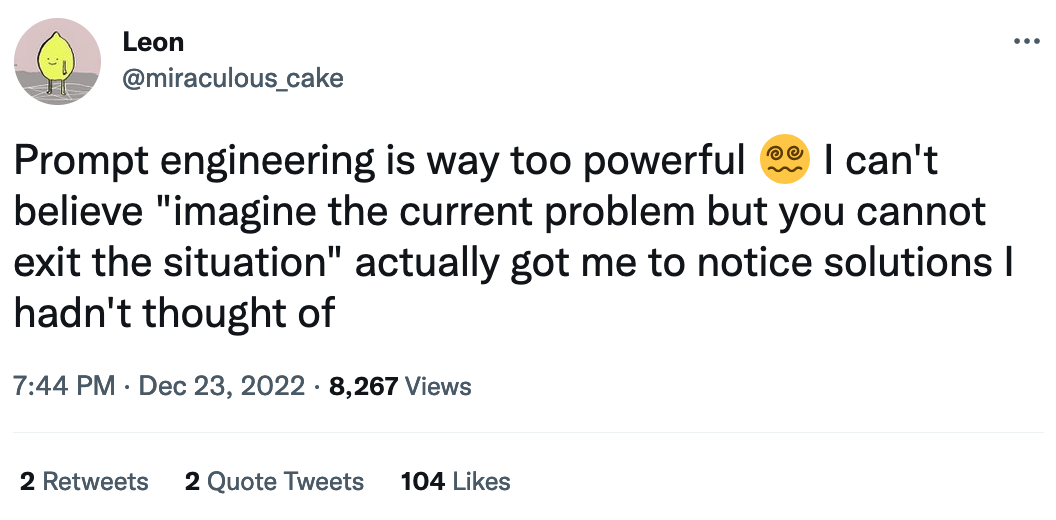
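
To make the wording point concrete, here is a minimal sketch in Python. The helper `build_story_prompt` and its `audience` parameter are made up for illustration and are not part of any library; the code only builds the prompt text and does not call a model.

```python
from typing import Optional


def build_story_prompt(subject: str, audience: Optional[str] = None) -> str:
    """Build a story prompt, optionally naming the intended audience.

    Hypothetical helper for illustration only: it assembles text and
    never sends anything to a language model.
    """
    # Naming the audience is the only difference between the two variants.
    story = f"a story for {audience}" if audience else "a story"
    return f"The following is {story} about {subject}."


plain = build_story_prompt("a dog who can talk to cats")
guided = build_story_prompt("a dog who can talk to cats", audience="children")

print(plain)
print(guided)
```

The two prompts differ by only two words, yet the second gives the model a much clearer picture of the kind of text that should follow.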

Then I had an idea: if AI could be prompted just via text, could… we be prompted the same way? Could we use certain questions as prompts to extract useful solutions from our own heads? After all, a lot of the time, we overlook solutions right in front of us. A surprising number of problems are solvable but unsolved because we’re missing a way to draw out the solutions that are already in our minds.

And so I tried it. It worked.

“imagine you have no choice but to solve your problems, now go solve them”

(I’m being intentionally vague with details here because I’d prefer to keep them private, but luckily this seems to be a very general trick.)

Here are some other prompts I’ve used at one point or another:

- If you were wrong, then what would be wrong and how would you fix it?

- Suppose you knew for a fact that future-you had solved the problem – what must be true of the solution?

- How could you get it done with far less time, energy, or resources? Can any of those ideas transfer over?

- What would a better, more capable, kinder, smarter, more virtuous version of yourself do?

Notably, none of these prompts actually bring in external knowledge. They basically boil down to “think harder so you’re more likely to figure things out”, except they actually work and “think harder” doesn’t. (This isn’t a particularly novel idea, by the way: talk-based therapy is basically this when it works, but I thought it was neat that it works with artificial and natural intelligence alike!) It’s not magic; just an effective way of nudging ourselves into realizing the solutions that were within reach all along!

–

Oh, and, by the way, about half of this essay was written and/or revised with the assistance of GPT-3 itself, with a bit of gentle prompting along the way. Just thought I’d mention that ;)