My computer misbehaved so I went down a rabbit hole

I sat in the computer lab, staring at my laptop screen, wondering where things had started to unravel and go so horribly wrong.

Forty-six megabytes.

Forty-six stinkin’ megabytes.

Forty-six lousy, no-good, stinkin’ megabytes had brought down my entire computer.

I’d just tried to copy forty-six megabytes of data onto my USB, and the computer had refused.

At the time I’m writing this, a flash drive that holds a thousand gigabytes goes for a measly $35.

If you do the math, that means roughly a sixth of a penny's worth of ones and zeroes had brought my entire laptop to a standstill.
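For anyone who wants to check the arithmetic, here's a quick sketch. It assumes decimal units (1 GB = 1,000 MB) and the $35-per-1,000-GB price quoted above.

```python
# Back-of-the-envelope cost of 46 MB at $35 per 1,000 GB (decimal units assumed).
PRICE_DOLLARS = 35
DRIVE_MB = 1000 * 1000  # 1,000 GB expressed in MB
FILE_MB = 46

def file_cost_cents(file_mb: int = FILE_MB) -> float:
    """Return the share of the drive's price, in cents, taken up by file_mb."""
    return PRICE_DOLLARS * 100 * file_mb / DRIVE_MB

print(f"{file_cost_cents():.3f} cents")  # prints "0.161 cents"
```

That's about 0.16 cents: more than a tenth of a penny, less than a fifth.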

As luck would have it, I didn't have internet access[1], so emailing the file to myself or using some file-sharing service like Google Drive was out of the question.

Oh well, I figured. Might as well pull it out and plug it back in, and try again.

I clicked the button to safely eject the USB. A popup appeared, telling me I couldn’t. Huh? Oh. Right, the computer was still trying to copy the file over. I tried to stop the copying process by hitting Ctrl+C, because years of using the terminal had taught me that Ctrl+C stopped most programs.

Nothing happened.

Somehow my attempt to copy the file was still valiantly refusing to admit defeat at the hands of…whatever it was that had caused forty-six megabytes to frustrate the chunk of metal and plastic before me.

I readied the command to send the SIGKILL signal.

SIGKILL is a signal that tells running processes to stop immediately. SIGKILL is the last resort when they refuse. SIGKILL is the final word. In operating systems class, we are taught that SIGKILL does not fail. We are taught that it cannot be handled, cannot be caught, cannot be ignored. When you say SIGKILL to a process, it terminates and does not argue.
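Here's a minimal sketch of that difference in Python, assuming Linux or another POSIX system. It tries to install a handler for SIGKILL (the OS refuses), then starts a throwaway child process that ignores SIGTERM and shows that SIGKILL still ends it. The helper names are made up for illustration.

```python
import signal
import subprocess
import sys

def sigkill_handler_refused() -> bool:
    """Return True if the OS refuses to let us catch or ignore SIGKILL."""
    try:
        signal.signal(signal.SIGKILL, signal.SIG_IGN)
    except OSError:
        return True  # the kernel rejects any attempt to change SIGKILL's handling
    return False

# A hypothetical child that ignores SIGTERM, announces it's ready, then sleeps.
CHILD_CODE = (
    "import signal, time\n"
    "signal.signal(signal.SIGTERM, signal.SIG_IGN)\n"
    "print('ready', flush=True)\n"
    "time.sleep(60)\n"
)

def sigterm_vs_sigkill() -> tuple[bool, int]:
    """Return (survived_sigterm, returncode_after_sigkill)."""
    child = subprocess.Popen([sys.executable, "-c", CHILD_CODE],
                             stdout=subprocess.PIPE, text=True)
    child.stdout.readline()            # wait until the SIGTERM handler is in place
    child.send_signal(signal.SIGTERM)
    try:
        child.wait(timeout=1)
    except subprocess.TimeoutExpired:
        pass                           # still running: SIGTERM was ignored
    survived = child.poll() is None
    child.kill()                       # on POSIX, Popen.kill() sends SIGKILL
    code = child.wait()                # negative signal number when killed by a signal
    child.stdout.close()
    return survived, code

if __name__ == "__main__":
    print(sigkill_handler_refused())   # True
    print(sigterm_vs_sigkill())        # (True, -9) on Linux
```

The child can shrug off SIGTERM because it asked to, but there is no way for it to ask the same about SIGKILL.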

I sent SIGKILL.

My unstoppable force raced to take on the immovable object.

Nothing happened.

SIGKILL had been stopped dead in its tracks.

Great. Just great. Now what?

…

Oh. Right.

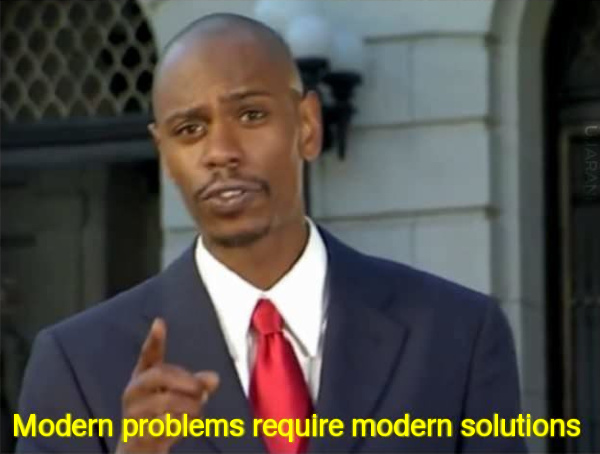

There was a different way out of this mess. After spending too much time around computers and not enough time in the real world, you start to think that only computer magic can solve computer problems. I'd assumed that removing the USB had to come after doing a bunch of stuff on the computer, but…

[Image: My thought process]

But computers and the devices attached to them are still physical objects that exist in the physical world. And therefore…

I pinched the USB in my fingers and yanked it out of the laptop. Who needs safe-eject anyways?

I plugged it in again and was greeted by an array of question marks. Shortly afterwards I found out that all the data had been corrupted. And that’s why safe-eject exists. Oops.

I had clearly gone way too deep down the rabbit hole and I suspected that if I kept digging for another hour or two, I would emerge somewhere in the Indian Ocean, browsing the fifteenth page of Google results, covered in bubblewrap for some reason, babbling in Klingon about virtual memory. And yet, since I’d already come so far, I just had to keep digging anyways until I figured it out.

One of the big frustrations you come across when you deal with computer problems is that it’s not always clear whether the problem is because of some silly settings issue or if there’s actually a surprisingly interesting explanation for the bad behavior. The former can be quite frustrating to deal with, and even when you come across the fix, it might seem like such a tiny change that it’s an unsatisfying ending anyways. There are tools like debuggers that make life easier, but sometimes computers can just seem inscrutable.

I wasn’t sure what type of problem this was going to be, but I started digging anyways. And, as it turns out, it was both.

After some more digging, during which I found that this is a surprisingly common issue (Exhibits A, B, C, D, E, F), I eventually stumbled onto some answers. But first, some context.

Computers can store information and data through their file system. We interact with this every time we save a file, or download something from the internet, or record a video. But regular computer users shouldn't need to directly access the ones and zeros that make up their data, for the same reason we don't expect people to adjust the plumbing in their house every time they want to use the bathroom, or mess with the electrical wiring when they just want to turn on the lights. Instead, we have tools and interfaces to do these things for us: the electrical socket in the wall, the toilet and the handle to flush, and so on and so forth. They do the work for us, and we just tell them what to do (e.g. you get the plumbing system to flush the toilet by pulling the handle down, instead of having to manipulate the water inside your pipes directly).

[Image caption: Messing directly with memory can feel like this, except with computers it happens a million times faster]

When it comes to computers, we also have these interfaces. Usually we don't want to do the drudge-work of dealing with the ones and zeros inside our computer directly. We'd rather think about abstract things like files and folders and platonic solids and sip our tea and argue about poetry. We just tell the computer to move this file there and copy that file to a new folder, and the kernel (a piece of software that makes up the core of a computer's operating system) does that for us. The kernel does the actual work; we just tell it what to do.

Naturally, this is a much easier way of doing things than some incredibly convoluted process in which you manually change the configuration of the little magnets in your hard drive and risk accidentally wiping out decades of memories by making inadvertent eye contact with digital gremlins living inside your computer.

Regardless of whether they're part of the kernel or if they run in userspace, computer programs get translated into a sequence of instructions that the computer carries out, one after the other[2]. If they're not kernel programs, it's fine to stop them while they're running, or interrupt the computer and tell it "hey, carry out these special instructions before you continue with whatever you were just doing." It's OK to interrupt these ordinary programs because the computer will still function afterwards. If your internet browser crashes, or your game breaks, that's unfortunate but not the end of the world. Your computer will still work, even if you get a concerned text or two from your friend with whom you were just video-chatting.

The kernel is different. It’s the engine running things. Every time you type a letter, or move your mouse, or play music, or open a file, the kernel takes over. It’s really important that the kernel code works correctly. If you mess with the kernel while it’s doing something important and something goes wrong, you might not have a functional computer afterwards.

SIGKILL is extremely effective against non-kernel (userspace) code precisely because the kernel itself carries it out: the kernel steps in, stops the running userspace program, and tears it down. It has powers that regular programs don't.

Unfortunately, since doing just about anything with the file system involves the kernel, I'd probably sent the SIGKILL while the kernel was still busy doing work on the copy's behalf. When you send a SIGKILL while the kernel is in the middle of an uninterruptible task, the signal just sits there, pending, until the kernel finishes its job and hands control back to the userspace program. That was why SIGKILL had failed.
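On Linux you can see this state for yourself: a process stuck inside the kernel like this shows up with state `D` (uninterruptible sleep) in `ps`, and in the third field of `/proc/<pid>/stat`. Here's a small sketch that reads that field. It assumes a Linux `/proc` filesystem, and the function names are hypothetical.

```python
import os

def parse_state(stat_line: str) -> str:
    """Extract the one-letter state from a /proc/<pid>/stat line.

    The second field is the command name in parentheses and may itself
    contain spaces or ')', so split after the *last* ')'.
    """
    _, _, rest = stat_line.rpartition(")")
    return rest.split()[0]

def process_state(pid: int) -> str:
    """Return e.g. 'R' (running), 'S' (sleeping) or 'D' (uninterruptible sleep)."""
    with open(f"/proc/{pid}/stat") as f:
        return parse_state(f.read())

if __name__ == "__main__":
    print(process_state(os.getpid()))
```

Pointing `process_state` at the PID of a hung `cp` is a quick way to tell whether it's in `D` state, where a SIGKILL will wait its turn.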

But the story didn't end there. If the kernel was moving the ones and zeros from the hard drive to the USB, how come there was no indication that it was making progress? Why didn't the little progress bar go anywhere?

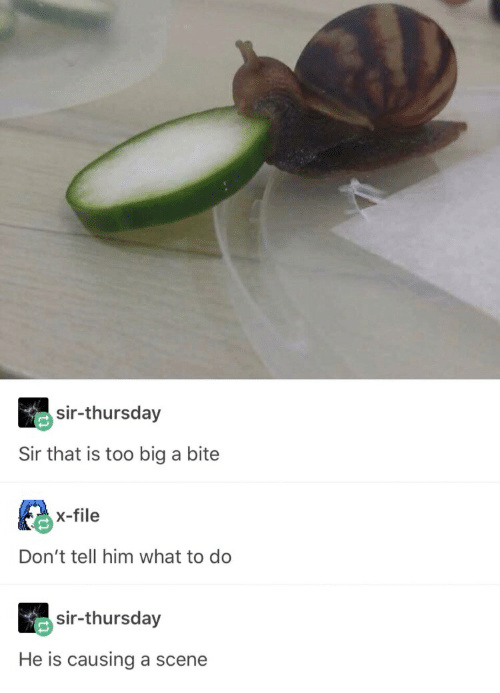

You see, normally when the kernel copies a file from one place to another, it doesn't do the whole thing in one bite. Rather, it's supposed to work in little chunks. Every so often, the kernel goes "OK, just finished copying that little chunk of data over!" and hands the reins back to the userspace code. In between processing the chunks of data, the computer will update the little progress bar and take care of lots of other invisible things in the background.

To illustrate:

The naive way to do things

Userspace: please copy these 10000000 integers

Kernel: will do

Kernel: copying all 10000000 integers…

User: wait stop

Kernel: copying all 10000000 integers…

User sends SIGKILL: STOP COPYING INTEGERS

Kernel: copying all 10000000 integers…

now we have to wait however long it takes for the kernel to finish all 10000000 integers, during which the magic ritual to move ones and zeros around cannot be interrupted

Doing things in little batches

Userspace: please copy these 10000000 integers

Kernel: will do

Kernel: copying the first 100 integers, then going to check back with you in case anything happens…

User: wait stop

Kernel: copying the first 100 integers, then going to check back with you in case anything happens…

User sends SIGKILL: STOP COPYING INTEGERS

Kernel: ok, just finished copying the first 100 integers, now I will check if anything happened in the meantime– OH MY GOODNESS A SIGKILL, TIME TO DROP EVERYTHING AND STOP THIS PROCESS AT ONCE

process stops and life continues
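The difference between the two scripts above can be sketched as a toy simulation. This is not how the kernel's copy code is actually written; it only models a loop that checks for a pending kill signal between batches, uses a made-up function name, and copies a smaller list so it runs quickly.

```python
def copy_in_batches(src, batch_size: int, kill_after: int) -> list:
    """Copy src batch by batch. A simulated SIGKILL arrives once kill_after
    items have been copied, but it is only noticed between batches."""
    dst = []
    kill_pending = False
    for start in range(0, len(src), batch_size):
        # Inside a batch nothing is checked: this part is "uninterruptible".
        for item in src[start:start + batch_size]:
            dst.append(item)
            if len(dst) == kill_after:
                kill_pending = True  # the signal arrives but has to wait
        # Checkpoint between batches: now the pending signal takes effect.
        if kill_pending:
            break
    return dst

numbers = range(10_000)
print(len(copy_in_batches(numbers, batch_size=len(numbers), kill_after=150)))  # 10000: one huge batch
print(len(copy_in_batches(numbers, batch_size=100, kill_after=150)))           # 200: stops after batch two
```

The signal always lands at the same moment; what changes is how much work has to finish before anyone looks at it. In the worst case you wait for one full batch, which is why giant batches behave just like the naive approach.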

When it does things in little batches, the kernel takes a moment to pause and reflect on life and the nature of existence after finishing each batch. Unfortunately, in this case the computer settings made the batches very large[3]. The kernel ended up biting off more than it could chew, and things got extremely slow because not much else could happen in the meantime.

As a result of the batches getting too big, the kernel needed to do a lot of work before any signals would take effect, so we end up right back at the problem with the naive solution, where you can't do a whole lot because the kernel is busy for a long time.

The fix was simple: make the kernel take smaller bites.

In the file `/etc/sysctl.conf`, add these two lines to the bottom:

`vm.dirty_background_ratio = 5`

`vm.dirty_ratio = 10`

Then run `sudo sysctl -p`.
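Both settings are percentages. Roughly, `vm.dirty_background_ratio` is the point at which the kernel starts writing dirty data out in the background, and `vm.dirty_ratio` is the point at which a process that keeps writing has to stop and help write that data out itself. To get a feel for the numbers, here's a sketch that turns them into byte counts. It takes a memory size you pass in, which is a simplification: the kernel applies the percentages to the memory it considers available for dirty pages, not to total RAM. The 8 GiB figure is hypothetical, the common defaults of 10 and 20 are shown for comparison, and `read_vm_setting` assumes Linux.

```python
from pathlib import Path

def read_vm_setting(name: str) -> int:
    """Read an integer sysctl such as 'dirty_ratio' from /proc/sys/vm (Linux only)."""
    return int(Path("/proc/sys/vm", name).read_text())

def dirty_thresholds(mem_bytes: int, background_ratio: int, ratio: int) -> tuple[int, int]:
    """Convert the two percentages into byte thresholds for mem_bytes of memory."""
    return mem_bytes * background_ratio // 100, mem_bytes * ratio // 100

GIB = 1024 ** 3
MIB = 1024 ** 2
mem = 8 * GIB  # hypothetical amount of memory available for dirty pages

for label, (bg, hard) in {"defaults 10/20": (10, 20), "tuned 5/10": (5, 10)}.items():
    bg_bytes, hard_bytes = dirty_thresholds(mem, bg, hard)
    print(f"{label}: background writeback at ~{bg_bytes // MIB} MiB, "
          f"writers made to wait at ~{hard_bytes // MIB} MiB")
```

With 8 GiB that's roughly 0.8 GB and 1.6 GB for the defaults versus 0.4 GB and 0.8 GB after the change. Calling `read_vm_setting("dirty_ratio")` shows what your own machine is currently using.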

And that worked. The file transfer still took a while, but this time the kernel wouldn’t take over the system for big chunks of time and slow everything else down. I could still use my computer as it copied the file in the background.

And that was that.

Footnotes

1: I was booting Kali Linux from USB because I was learning about cybersecurity and was sniffing packets on a wifi network I was authorized to sniff. That's why I didn't have internet access: I was booting from USB.

2: This is a gross oversimplification of many details but it’s accurate enough for this story.

3: Technical details

Why would anyone design an operating system where the batches get so big?

Often in the case of big, complicated systems, no individual thing makes things go wrong. Rather, it’s a number of things that have to happen in just the right configuration for the thing to suddenly blow up, and that’s why it’s helpful not to blame any one part (especially not a single scapegoat individual if there are people involved) when it’s a combination of a lot of different things.

In this case, several things had to happen, none of which caused the problem by themselves:

- I was booting Kali Linux from USB. If I'd been on my regular OS, I could have just sent the file to myself over the internet, and it's also possible the copy would have been quicker.
- I tried to copy the file to a USB flash drive (and USB flash drives are notoriously slow).
- The file was relatively large.
- The laptop is 64-bit, and the default settings controlling how much work the kernel does in each batch worked quite well back in 32-bit-architecture land.

Why did the batches get too big?

`cp` created dirty pages (chunks of file data that have been changed in memory but not yet written out to the device) far faster than the flash drive could absorb them, so the process could pile up a lot of dirty pages before anything forced them out to the USB. When the kernel finally did write them out, it had a huge backlog to push to a slow device all at once, and you can't interrupt that, because `cp` sits in an uninterruptible sleep state while it's going on.
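To make the pile-up concrete, here's a toy model in Python. Everything is in whole megabytes and one-second ticks, there's a single dirty limit (the background threshold is ignored), and the speeds in the example (500 MB/s for `cp`, 5 MB/s for the USB drive) are made up. It illustrates the idea, not the kernel's real writeback algorithm.

```python
def simulate_copy(file_mb: int, cp_mb_per_s: int, usb_mb_per_s: int,
                  dirty_limit_mb: int) -> tuple[int, int, int]:
    """Toy model of cp writing a file to a slow USB drive through the page cache.

    Returns (second when cp finishes, peak dirty MB, second when all data is on the USB).
    """
    if cp_mb_per_s <= 0 or usb_mb_per_s <= 0 or dirty_limit_mb <= 0:
        raise ValueError("speeds and dirty limit must be positive")
    copied = 0      # MB that cp has handed to the kernel (now dirty in memory)
    dirty = 0       # MB sitting in memory, not yet written to the USB
    peak = 0
    t = 0
    cp_done_at = 0
    while copied < file_mb or dirty > 0:
        t += 1
        # cp dirties more pages, but is held back once the dirty limit is reached.
        new = max(0, min(cp_mb_per_s, file_mb - copied, dirty_limit_mb - dirty))
        copied += new
        dirty += new
        peak = max(peak, dirty)
        # Writeback drains dirty data to the USB at the drive's speed.
        dirty -= min(usb_mb_per_s, dirty)
        if copied == file_mb and cp_done_at == 0:
            cp_done_at = t
    return cp_done_at, peak, t

print(simulate_copy(46, 500, 5, dirty_limit_mb=1000))  # (1, 46, 10)
print(simulate_copy(46, 500, 5, dirty_limit_mb=10))    # (9, 10, 10)
```

In both runs the data reaches the drive at the same time, because the USB drive's speed is the bottleneck. What changes is the backlog: with a generous limit, `cp` "finishes" almost immediately and leaves the whole file sitting in memory to be flushed later, while a small limit keeps the pile of dirty pages small and makes `cp` keep pace with the drive.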

Why can it dirty so much memory so quickly?

My laptop is 64-bit, and it turns out that 64-bit versus 32-bit matters a lot here. By default, the limit on how many dirty pages can pile up is expressed as a percentage of memory. On a 32-bit system that percentage is taken of low memory only, which keeps the limit fairly small; on a 64-bit system it's taken of (nearly) all of the RAM, so a process can dirty a very large amount of memory before the kernel makes it stop and wait.

From the first linked article:

> Two other details are key to understanding the behavior described by Artem: (1) by default, the percentage-based policy applies, and (2) on 32-bit systems, that ratio is calculated relative to the amount of low memory — the memory directly addressable by the kernel, not the full amount of memory in the system.
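A rough sketch of why that matters, applying the same percentage to two different bases. It assumes the classic 32-bit x86 setup where low memory tops out around 896 MiB, a hypothetical laptop with 8 GiB of RAM, and the common default `vm.dirty_ratio` of 20. The real kernel calculation has more inputs than this.

```python
def dirty_limit_mib(ram_mib: int, ratio: int, is_32bit: bool, lowmem_mib: int = 896) -> int:
    """Approximate dirty-page limit in MiB.

    On 32-bit the percentage applies only to low memory (capped here at
    lowmem_mib, an assumed typical value); on 64-bit it applies to
    (roughly) all of RAM.
    """
    base = min(ram_mib, lowmem_mib) if is_32bit else ram_mib
    return base * ratio // 100

ram = 8 * 1024  # hypothetical 8 GiB laptop, in MiB
print("32-bit:", dirty_limit_mib(ram, 20, is_32bit=True), "MiB")   # 32-bit: 179 MiB
print("64-bit:", dirty_limit_mib(ram, 20, is_32bit=False), "MiB")  # 64-bit: 1638 MiB
```

Same setting, roughly nine times as much dirty data allowed to pile up before anything has to be written to the slow drive.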

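The fix (see above) worked because lowering the two ratios caps how much dirty data can pile up before the kernel starts writing it out, so the kernel never has to push an enormous backlog onto the slow USB drive in one long, uninterruptible stretch.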

Further reading: