Making computers feel safe

When you use a computer, how do you feel?

That’s an odd question, isn’t it? We’re not used to thinking about how we feel when we use computers. We’re focused on what’s on the screen. In the moment, we exist from the neck up only. We don’t notice the gut feeling of excitement when we’re about to watch a good movie or play a video game, or the not-quite-boredom of digital housekeeping. We don’t notice when computers make us feel worried or anxious.

Worried? When was the last time you felt worried interacting with a computer?

Let me spin a little scenario.

The scientist

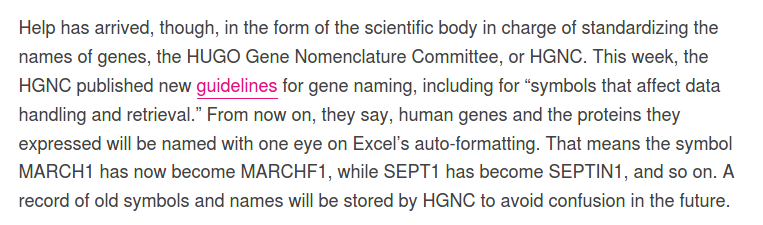

You are a scientist, toiling away in your lab, researching the next bit of chemistry that lets rockets fly a little further, or the next step towards curing a genetic disease, or a new type of glue that lets you cleanly peel stickers off without leaving an annoying sticky residue. You patiently add data and measurements to a massive spreadsheet, observation after observation. It’s close to the end of your day and your head is tired from keeping track of it all. You add one last number, and out of the corner of your eye you see a column on the other side of the screen flip.

You blink, and look again. Nope, no change. Or was there? Did you accidentally trigger some formula, working invisibly in the background? Is your formula right? It’s late in the day. You blink, squint, and look again. Did you lose some data by mistake? Are you sure that you did it right? You blink again. The deadline is soon. You need the data and can’t afford mistakes. Those were some expensive experiments. You squint and look again at the column, then back at the number you just entered. Wait, where’d it go?

Now imagine this scenario running through your head every time, just before you type anything. Worried yet?

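If you thought “just don’t make mistakes” could work at scale, consider that entire fields of scientific research have had to change to accommodate software– software! (Source) Geneticists, for example, renamed human genes such as SEPT1 and MARCH1 because spreadsheet programs kept silently converting those names into dates.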

Keep in mind that in this scenario, you use the computer regularly as part of your job, so you’re probably more comfortable than the average person at using it. What if your day-to-day doesn’t require you to use computers, and then once in a while you have to navigate a confusing ocean of buttons, clicks, and screens? It’s nice to imagine a future when everyone is technologically literate, but what happens between now and then?

How about if you have no way of telling what, precisely, you changed today versus yesterday versus the day before, so you don’t know what to undo if you realize you made a mistake? How about if you accidentally spill water on your computer? What if your computer hardware fails? Do you have backups of the data? (Oh, you do have backups? Excellent, have you tested that your backups actually work? No? Then you don’t have backups.)

Even if you’re skilled at using a computer, what if your task is working on a spreadsheet with years and years’ worth of financial data, and if you mess up, you’re on the hook for millions or billions of dollars? What if human lives are directly on the line?

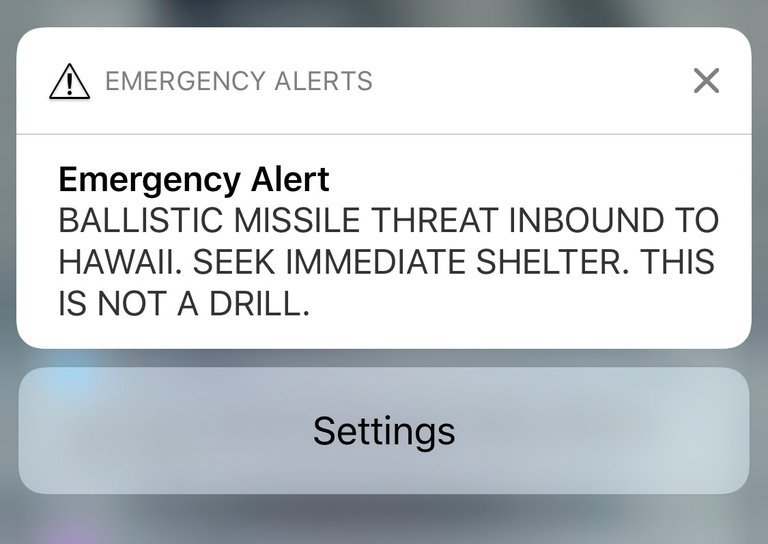

Hawaii

In January 2018, people in Hawaii received an alert that a ballistic missile was on the way and that they should seek shelter immediately.

It took thirty-eight terrifying minutes for a follow-up to announce that this was a false alarm: a government employee had made a mistake and failed to say it was a drill. It’s tempting to just blame the employee, but what about the computer system? It was set up so that a single person could make this happen. How confident would you be if this were your job, and you were the only thing standing between a normal drill and an incident like this?

Humans are fallible. The lesson to learn is not that we just need to try harder, but that we should look at how we put someone in a position to make a mistake like this with no guardrails.

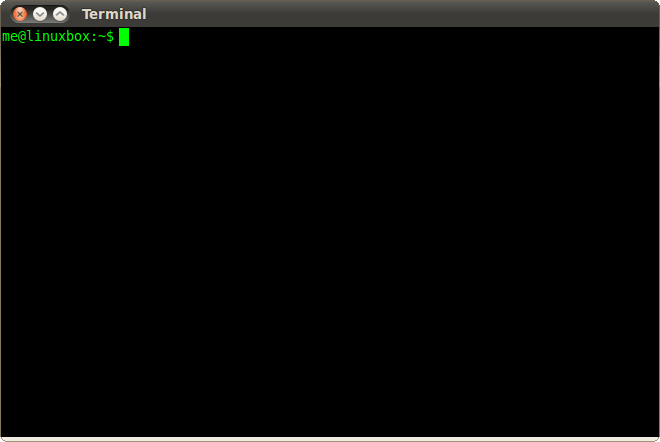

Introductory programming

Teach introductory programming and computer science long enough and you’ll find that plenty of students worry about things experienced programmers take for granted. Imagine, for a moment:

A student whose first programming homework is due in three days, encountering for the first time an unblinking cursor floating alone in an ocean of black.

When you’re first learning computer programming, your first loop feels like an incredibly powerful tool. Having the computer do something over and over again, ten times, a hundred times, a thousand, a hundred thousand, all in the blink of an eye– it’s a big deal. It feels as though you’re an early human from millions of years ago, banging two rocks together, when suddenly a few sparks fly out. You have no idea what just happened or what to make of it, but you know something big just happened.

Now imagine your first infinite loop. The program never ends, and the computer spews out text until it covers the screen. If you’re comfortable with programming, you know that you can just click the stop button and interrupt the program and you’ll basically be fine. You’re not going to overwrite any important data, you’re not going to burn out your hardware, you’re not going to break things. How hard could it be to find the stop button and click it?

“Just” find the button that does what you want. How hard could it be?

Where do we go from here?

It’s a curse of computing that we’re used to slightly badly behaved software: things that set us on edge just a bit but aren’t broken enough to be worth complaining about. We don’t notice how we feel until someone points it out, because we’re used to just making do with what we have. Our interactions are held together with duct tape, bubblegum, and prayers that it’ll work. To many of us, the computer is a silicon god, and on some level it feels like all its quirky, surprising, unexpected behavior is simply the whim of a higher being we cannot hope to comprehend.

Is that the best we can do, though? Imagine, for a moment, a computer program that:

- Does what you want it to do, always (or at least almost always)
- Doesn’t fail catastrophically by wiping out important data or work, or by failing at the worst possible time
- Tells you immediately when things go wrong, instead of two months after the fact when it blows up in your face
- When things go wrong, tells you not only what went wrong but also how it got that way, why it’s wrong, and how to fix it

We’d feel a lot safer with this, wouldn’t we?

To be fair, we can’t remove all the danger from computers. Power tools are powerful. So long as you can delete one piece of data, you can delete everything by mistake. So long as you can make the computer do repetitive data manipulation at breakneck speed, it can magnify small human mistakes in a way no human assistant can. What then?

Hold off on that for a moment, and think about how we teach any other topic. Any teacher, coach, or tutor worth their salt understands the difference between learning a bad habit, making an irreversible mistake, and making small, nitpick-able mistakes. Being picky is perfectly fine, but there’s a good reason why excellent teachers know when and how to reassure their students that the basics are solid before nitpicking the details. Students can fix bad habits, but it’s really, really hard to fix being too scared to practice for fear of picking up bad habits.

In the same way, safety in computing isn’t about terrifying people into not being able to use the computer “properly”, but rather about helping them become first-class digital citizens in their own right. “This is unsafe” is unhelpful on its own. Getting out of bed too fast is unsafe. Crossing the street is unsafe. Drinking water is unsafe. How unsafe is it? What are the risks? Which ones can you afford? Which ones do you have to insure against?

You can try to forbid people from using digital password managers for their pet dog’s social media account just because password managers aren’t perfectly secure against nation-state hackers. In the end, that just means they’ll write their passwords on Post-it notes and stick them up in plain sight, then forget to hide them when the media comes for a photo shoot, and suddenly those passwords have been exposed to the entire world.

By the way, in case that seemed like an oddly specific example, keep in mind that Post-it password leaks have actually happened in real life. These aren’t isolated incidents, and the lesson here is not to simply blame the humans involved and assume our work is done. It’s happened at BBC News, at the Super Bowl (for my international readers, a major American sporting event), and even– wait for it– with the Hawaii missile alert system I mentioned earlier.

Despite how tempting it is to boil safety down into a few catchy principles, there’s no denying that there’s still an art to it. Like it or not, we can’t checklist our way to a feeling. That’s frustrating, since it’s not always obvious what the right choice is. That’s what makes it tempting to throw your hands up in the air and announce that handling errors is hard and writing reliable code is hard and feeling safe is hard and predicting how things can go wrong is hard and predicting how people act is hard and computers are hard and everything is hard. But that’s rolling over and giving up, and I, for one, have no plans to do so.